Mappia's Networking Performance

Mappia operates your Magento store on multiple servers within a highly available, fault-tolerant, and scalable Kubernetes cluster. However, this scalability brings important performance considerations. While your store may be more "scalable" and "performant under load" than on other hosting providers, individual requests may be significantly slower than they would be elsewhere.

This performance degradation is almost always due to network latency (Network I/O).

Some examples of I/O include:

- Networked filesystem reads/writes (pub/media & var)

- Network calls to Redis

- Network calls to MariaDB

Fortunately, these types of issues are fairly common, and solving them is usually not overly difficult.

Below is a more detailed explanation of the key differences between a single-server deployment of Magento and deployment of your Magento store on Mappia.

The most important difference from single-server Magento

The following visualization depicts the single most important difference between Mappia and a typical Magento deployment.

Single-Server Application

+------------+
|   Server   |
+------------+
    |     |
    |     |
+---+-----+---+
|   Magento   |
|   MariaDB   |
|    Redis    |
+-------------+

Multi-Server Application

+-------------+       +-------------+      +-------------+
|  Server 1   |-------|  Server 2   |------|  Server 3   |
+-------------+       +-------------+      +-------------+
    |     |               |     |              |     |
    |     |               |     |              |     |
+---+-----+---+       +---+-----+---+      +---+-----+---+
|    Redis    |       |   Magento   |      |   MariaDB   |
+-------------+       +-------------+      +-------------+

Why does this matter?

In Mappia, leveraging the power of the Kubernetes orchestrator, we aim to distribute as much code as possible across all available servers. This means that, most of the time, Magento is not guaranteed to run on the same server as another connected application.

TIP

While techniques like taints and tolerations can be implemented to improve this behavior, relying solely on these methods is not advisable.

To understand the consequences of this, let's compare what happens when Magento tries to query MariaDB under both circumstances.

Single Server Connections

+------------+
|   Server   |
+------------+
    |     |
    |     |
+---+-----+--------------------+
| Magento -----------------\   |
| MariaDB (unix-socket) <--/   |
| Redis                        |
+------------------------------+

In the single-server model, Magento can reach the MariaDB server through a Unix socket. The traffic never leaves the machine, so no network hop is involved, and the cost is comparable to reading from and writing to the underlying file system. A round trip is extremely fast, typically on the order of microseconds.
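To get a feel for how cheap a same-machine round trip is, the following minimal sketch times request/response exchanges over a local socket pair. It is an illustration, not a benchmark of MariaDB: the names `echo_server` and `mean_round_trip_seconds` are made up for this example, it assumes a platform where `socket.socketpair()` yields Unix domain sockets (Linux, macOS), and Python's own overhead will inflate the numbers compared with a native client.

```python
import socket
import threading
import time

MESSAGE = b"ping"


def echo_server(conn: socket.socket) -> None:
    """Echo every received chunk back until the peer closes the socket."""
    with conn:
        while True:
            data = conn.recv(64)
            if not data:
                break
            conn.sendall(data)


def mean_round_trip_seconds(round_trips: int = 1000) -> float:
    """Return the mean time of one request/response over a local socket pair."""
    # socketpair() defaults to AF_UNIX where the platform defines it.
    client, server = socket.socketpair()
    worker = threading.Thread(target=echo_server, args=(server,), daemon=True)
    worker.start()
    with client:
        start = time.perf_counter()
        for _ in range(round_trips):
            client.sendall(MESSAGE)
            reply = b""
            # A stream socket may deliver the reply in pieces, so read it fully.
            while len(reply) < len(MESSAGE):
                chunk = client.recv(64)
                if not chunk:
                    raise ConnectionError("echo server closed the socket early")
                reply += chunk
        elapsed = time.perf_counter() - start
    worker.join(timeout=1)
    return elapsed / round_trips


if __name__ == "__main__":
    print(f"mean local round trip: {mean_round_trip_seconds() * 1e6:.1f} microseconds")
```

Running the same loop against a service on another machine would add the network's round-trip time to every iteration, which is the difference the rest of this page describes.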

Multi Server Connections

Now let's contrast this with the multi-server model:

+-------------+       +-------------+      +-------------+
|  Server 1   |-------|  Server 2   |------|  Server 3   |
+-------------+       +-------------+   ^  +-------------+
    |     |               |     |       |      |     |
    |     |               |     |       |      |     |
+---+-----+---+       +---+-----+---+   |  +---+-----+---+
|    Redis    |       |   Magento   | --/  |   MariaDB   |
+-------------+       +-------------+      +-------------+

In that single arrow, there's much more going on than may be obvious, so let's delve deeper into it.

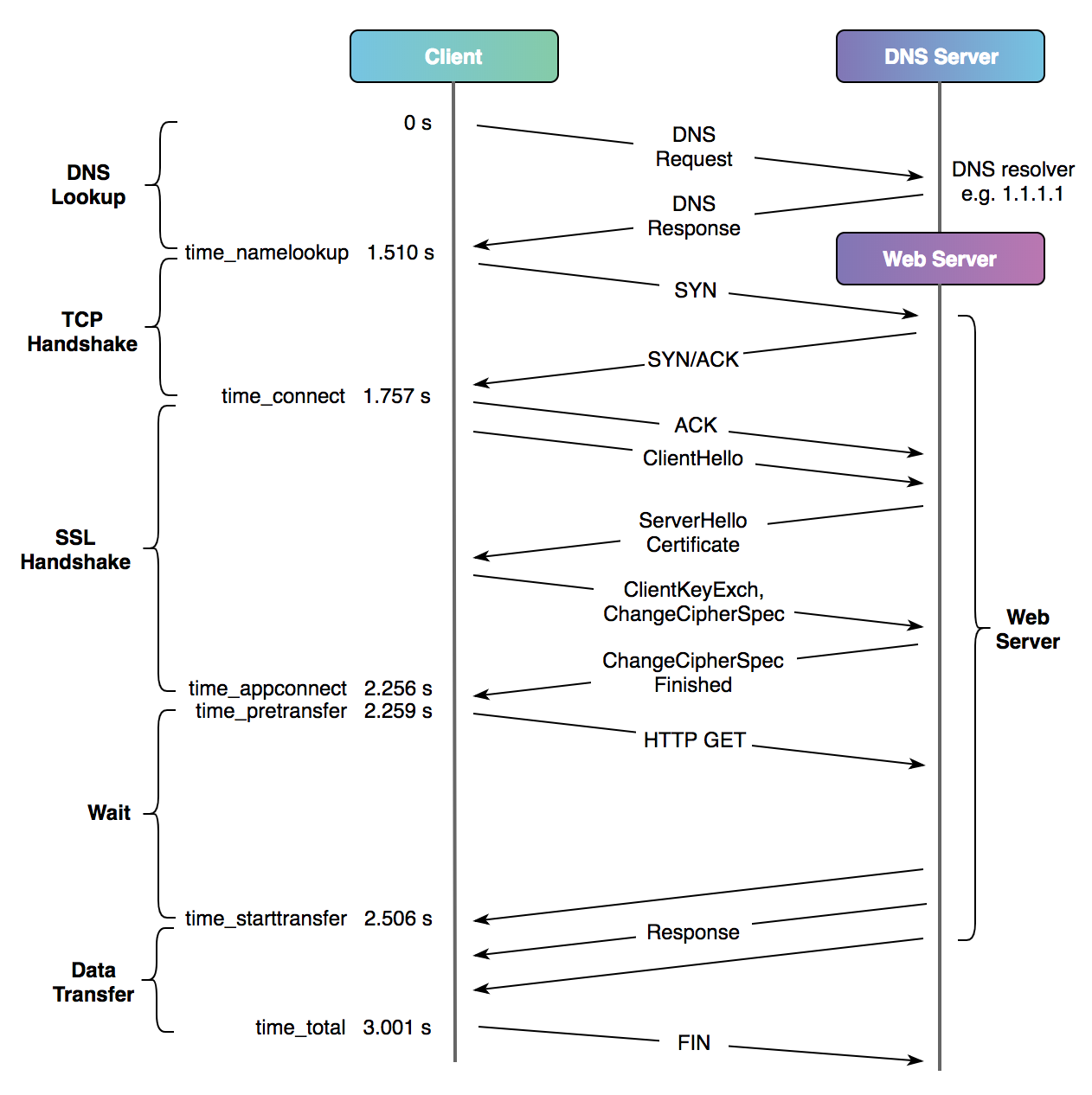

Take a look at the following image, paying attention to the number of times we jump from one side (via arrows) to the other.

When we open a TCP connection to a given server, three messages (SYN, SYN-ACK, ACK) must cross the network before we even request any data, and that excludes the additional exchanges required by the TLS protocol layer!

So, not only do we pay an upfront cost to initialize each connection, but we also pay a full round trip for every further request on that connection.
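The cost structure described above (a setup cost per connection plus one round trip per request) can be sketched as a small model. This is a rough estimate under stated assumptions, not a measurement: `total_network_seconds` and its parameters are hypothetical names, and `handshake_round_trips` is left configurable because the real figure depends on the protocol stack (TCP alone delays the first request by about one round trip, and TLS adds more).

```python
def total_network_seconds(
    queries: int,
    rtt_seconds: float,
    handshake_round_trips: float = 1.0,  # assumption: tune for your stack
    reuse_connection: bool = True,
) -> float:
    """Estimate time spent on the network for a number of sequential queries."""
    if queries == 0:
        return 0.0
    # A reused (persistent) connection pays the handshake once;
    # otherwise every query opens its own connection.
    connections = 1 if reuse_connection else queries
    handshake_cost = connections * handshake_round_trips * rtt_seconds
    request_cost = queries * rtt_seconds
    return handshake_cost + request_cost


if __name__ == "__main__":
    rtt = 0.001  # assumed 1 ms round trip between servers
    print("reused connection:   ", total_network_seconds(100, rtt))
    print("connection per query:", total_network_seconds(100, rtt, reuse_connection=False))
```

Under these assumptions, opening a new connection for every query roughly doubles the time spent on the network, which is why persistent connections matter more in a multi-server setup than on a single server.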

In a multi-server model, this time adds up very quickly. Connecting and transferring data between servers, even within the same local network, takes noticeable time: usually hundreds of microseconds, or even single-digit milliseconds, per round trip. At roughly 1 millisecond each, a thousand sequential round trips in a single request consume a full second simply retrieving data.
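The arithmetic above can be turned around to ask how many sequential network calls a request can afford. The sketch below uses integer microseconds to avoid floating-point rounding; the function names and the 200 ms budget in the usage lines are illustrative assumptions, not Mappia recommendations.

```python
def network_time_ms(calls: int, rtt_us: int) -> float:
    """Total time, in milliseconds, spent waiting on sequential round trips."""
    return calls * rtt_us / 1000


def max_sequential_calls(budget_ms: int, rtt_us: int) -> int:
    """How many sequential round trips fit inside a response-time budget."""
    return (budget_ms * 1000) // rtt_us


if __name__ == "__main__":
    # A thousand calls at an assumed 1 ms (1000 us) each: one full second.
    print(network_time_ms(1000, 1000), "ms")
    # With an assumed 200 ms budget and 500 us round trips.
    print(max_sequential_calls(200, 500), "calls")
```

Seen this way, every Redis lookup, database query, or networked file read in a request spends part of a fixed budget, so the number of calls matters as much as the speed of each one.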

Summary

This comparison is the most critical aspect for understanding performance differences between Mappia and single-server hosting providers. What appears "fast" on a single server may well seem "slow" in a multi-server environment.

This is where your code comes into play. This kind of problem will hinder your application from scaling, regardless of how many servers you throw at the problem.
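As an illustration of the kind of code pattern at stake, the sketch below compares fetching records one at a time with fetching them in a single batched call. `FakeRemoteStore` is a hypothetical stand-in that only counts round trips; it is not a Magento, MariaDB, or Redis API. Real equivalents of the batched call would be things like a SQL `WHERE id IN (...)` query or Redis `MGET`.

```python
from typing import Dict, List, Sequence


class FakeRemoteStore:
    """Stand-in for a networked service; counts round trips instead of sleeping."""

    def __init__(self, rows: Dict[int, str]) -> None:
        self._rows = rows
        self.round_trips = 0

    def get(self, key: int) -> str:
        """Fetch one row: one round trip per call."""
        self.round_trips += 1
        return self._rows[key]

    def get_many(self, keys: Sequence[int]) -> List[str]:
        """Fetch many rows: still a single round trip."""
        self.round_trips += 1
        return [self._rows[key] for key in keys]


def load_one_by_one(store: FakeRemoteStore, ids: Sequence[int]) -> List[str]:
    """The pattern that feels fast on one server and slow across servers."""
    return [store.get(i) for i in ids]


def load_batched(store: FakeRemoteStore, ids: Sequence[int]) -> List[str]:
    """Same result, but the network is crossed once."""
    return store.get_many(ids)


if __name__ == "__main__":
    rows = {i: f"product-{i}" for i in range(1000)}
    ids = list(rows)

    slow = FakeRemoteStore(rows)
    load_one_by_one(slow, ids)
    fast = FakeRemoteStore(rows)
    load_batched(fast, ids)
    print(slow.round_trips, "round trips vs", fast.round_trips)
```

Both functions return the same data; only the number of times the network is crossed differs, and that count is what gets multiplied by the inter-server latency.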

While remedying poorly written code is critical to addressing this problem at scale, we are also actively working with cloud providers to uncover methods and techniques that drive this latency lower and lower. However, latency will always exist to some extent.